During extensive benchmarking of the SR-71mach5 SpeedServer platform, ION uncovered a memory leak in Windows Server 2012 R2 acting as an SMB3 file server under very heavy load. The leak does not appear with larger block sizes or at low queue depths, but you can watch it in action here while the server handles 8kB random reads with 16 I/Os outstanding.

As far as we are aware, Microsoft Windows Server 2012 R2 did not originally exhibit this issue; it appears to have been introduced by a Windows Update released during 2016. Windows Server 2016 does not seem to suffer from the same problem.

The system under test is ION Computer Systems’ SR-71mach5 SpeedServer. The benchmark tool is IOmeter 1.1.0, 64-bit version for Windows. The SR-71mach5 SpeedServer is an all-SSD server with three RAID 5 arrays, each holding (5) 1.5TiB partitions. All (15) SSD RAID 5 partitions are shared. The server includes (12) ports of Intel 10Gb server NICs, all on the same subnet. Five clients take part in this test. Each client is a dual-processor Intel Xeon system with (2) 10GbE Intel server NICs. (4) clients run Windows Server 2012, while the remaining client runs Windows Server 2012 R2. The server shares the partitions, and the clients mount them using SMB3. The only tuning done is to enable 9014-byte jumbo frames on all 10GbE NICs on both the server and the clients. Each share is mounted and used by only one client.

IOmeter is configured with a Dynamo manager on each of the 5 clients. Each client runs 6 workers, 2 per mounted share. Outstanding I/Os is set to 16.
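The configuration above implies a specific amount of load in flight. The following is a small back-of-the-envelope sketch in Python that derives it from the figures in the text. It assumes each worker drives a single target (one share), so that the Outstanding I/Os setting of 16 applies once per worker; the class and field names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Topology:
    """Hypothetical model of the test setup; numbers are taken from the text."""
    arrays: int = 3
    partitions_per_array: int = 5
    clients: int = 5
    workers_per_client: int = 6
    workers_per_share: int = 2
    outstanding_per_worker: int = 16  # assumption: one target (share) per worker

    @property
    def shares(self) -> int:
        # Every RAID 5 partition is exported as its own share.
        return self.arrays * self.partitions_per_array

    @property
    def shares_per_client(self) -> int:
        return self.workers_per_client // self.workers_per_share

    @property
    def workers(self) -> int:
        return self.clients * self.workers_per_client

    @property
    def outstanding_total(self) -> int:
        # Total I/Os in flight against the server across all clients.
        return self.workers * self.outstanding_per_worker


t = Topology()
# Each share is mounted by exactly one client, so the shares must split evenly.
assert t.clients * t.shares_per_client == t.shares
print(t.shares, t.shares_per_client, t.workers, t.outstanding_total)
```

Under that assumption, the 15 shares divide evenly as 3 per client, and the server sees 30 workers with roughly 480 I/Os outstanding in total.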

The frame rate of this screen capture is low, but it plays in real time. During the 64kB random read test, performance is excellent but nothing interesting happens, so wait about a minute. About half-way through the one-minute 8kB random read test, the memory usage graph in Task Manager starts to ramp. In under one minute, the non-paged pool consumes all available RAM, and performance drops from 700,000 IOPS for 8kB random reads to about 240,000 IOPS for the 4kB random reads that follow. Smaller transfers would normally be expected to sustain at least as many IOPS as larger ones, so the lower 4kB figure reflects the degraded state of the server rather than the change in block size. (Sorry, we put Task Manager on top to watch the memory usage grow, which hid the IOPS display for the rest of the 8kB test.) As we have noted before, SSD performance stresses many parts of a system and exposes issues that were previously unknown. You can refer to ION’s previously published benchmark results to see how 8kB and 4kB random read performance compared back in March 2015.
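To put the two IOPS figures in context, here is a rough Python sketch that converts them into payload bandwidth and compares that with the nominal line rate of the server's twelve 10GbE ports. It assumes "8kB" and "4kB" mean 8192- and 4096-byte transfers and ignores SMB3, TCP/IP and Ethernet overhead, so the percentages are only approximate.

```python
KIB = 1024  # assumption: "8kB" = 8192 bytes, "4kB" = 4096 bytes
SERVER_LINK_BITS = 12 * 10e9  # twelve 10GbE ports, nominal line rate only


def throughput_bytes_per_s(iops: int, block_bytes: int) -> int:
    """Payload bandwidth implied by an IOPS figure at a fixed transfer size."""
    return iops * block_bytes


before = throughput_bytes_per_s(700_000, 8 * KIB)  # 8kB random reads, before the leak
after = throughput_bytes_per_s(240_000, 4 * KIB)   # 4kB random reads, pool exhausted

for label, bps in (("8kB @ 700,000 IOPS", before), ("4kB @ 240,000 IOPS", after)):
    share = bps * 8 / SERVER_LINK_BITS  # fraction of nominal server link capacity
    print(f"{label}: {bps / 1e9:.2f} GB/s, {bps * 8 / 1e9:.1f} Gbit/s, "
          f"{share:.0%} of nominal server link capacity")
```

By this estimate, the 8kB run moves about 5.7 GB/s (roughly 46 Gbit/s), well under the 120 Gbit/s of nominal link capacity, while the degraded 4kB run moves under 1 GB/s.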

The only documented memory leaks that ION could find described the non-paged pool growing by about 1GB per day. This capture shows the non-paged pool growing by about 62GB in roughly 1 minute.
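The gap between those two rates is easier to grasp as a single ratio. The Python sketch below treats both approximate figures from the text as exact and uses `Fraction` only to keep the arithmetic free of rounding error; it is an order-of-magnitude comparison, not a measurement.

```python
from fractions import Fraction

MINUTES_PER_DAY = 24 * 60

# Both figures are approximate in the text; Fraction only keeps the arithmetic exact.
documented_gb_per_min = Fraction(1, MINUTES_PER_DAY)  # documented leak: ~1GB per day
observed_gb_per_min = Fraction(62, 1)                 # this capture: ~62GB in ~1 minute

ratio = observed_gb_per_min / documented_gb_per_min
observed_gb_per_s = observed_gb_per_min / 60

print(f"Observed leak is roughly {int(ratio):,}x the documented rate")
print(f"That is about {float(observed_gb_per_s):.2f} GB of non-paged pool per second")
```

On these numbers, the pool grows roughly 90,000 times faster than the documented leak, on the order of 1GB every second.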

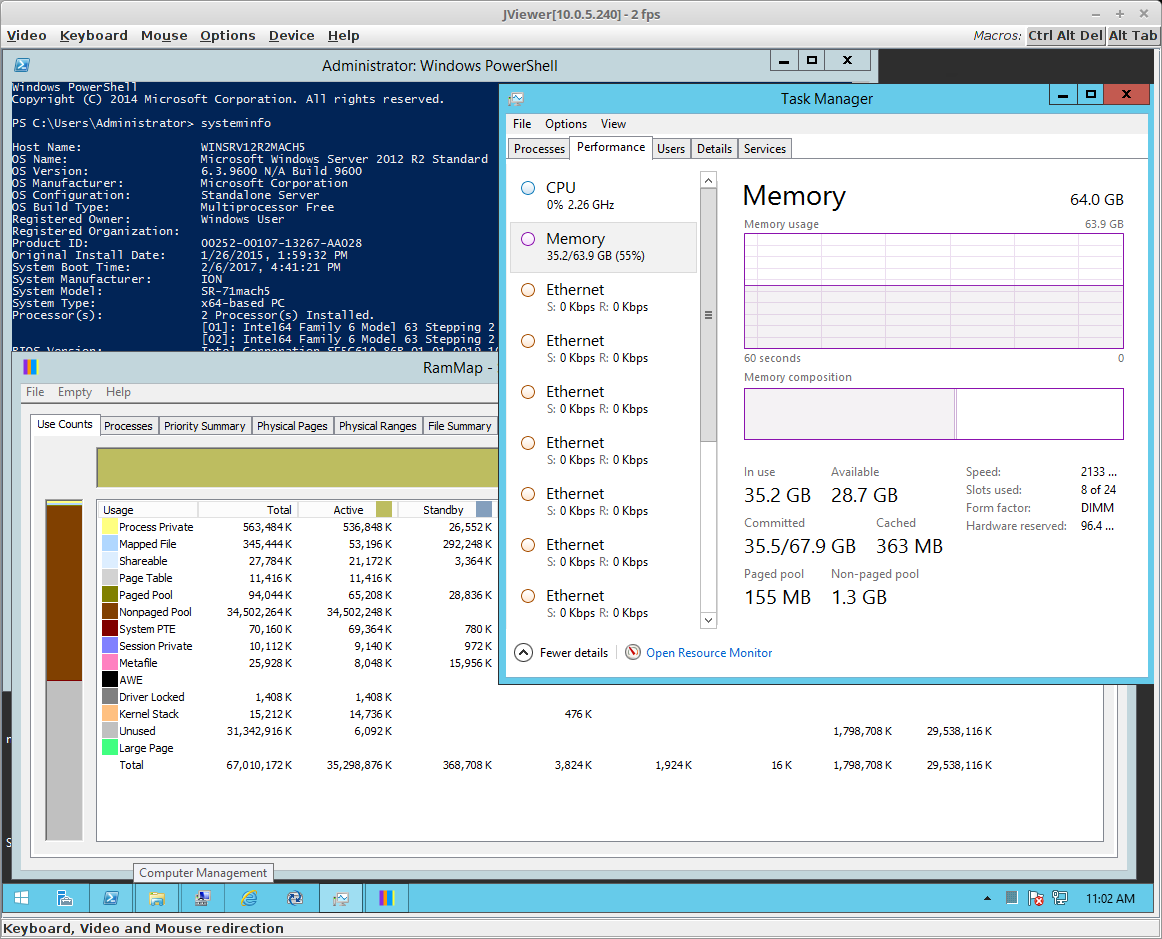

When we checked back on this system 18 hours later, Task Manager still showed over half of memory in use but only 1.3GB in the non-paged pool. Sysinternals’ RamMap, however, still showed 34GB in the non-paged pool.

When IOmeter is run locally, with all managers and workers on the SR-71mach5 SpeedServer itself and similar parameters, the non-paged pool stays steady at less than 500MB, even during a long sweep of over 2 hours per test covering 64kB Random Write, 64kB Random Read, 32kB Random Read, 16kB Random Read, 8kB Random Read, 4kB Random Read, 8kB OLTP, and 64kB OLTP. Because the pool grows only when the load arrives over the network, we believe the memory leak lies somewhere in file services, SMB3, or elsewhere in the network stack.